Building GenAI Apps with MongoDB Atlas Vector Search

Wednesday, March 5, 2025

What is MongoDB?

MongoDB is one of the most popular NoSQL databases, falling under the category of document databases. In MongoDB, data is stored in flexible, JSON-like documents. This makes it easy to model complex, hierarchical data structures without the constraints of relational tables.

Key Concepts in AI

- Artificial Intelligence: At its core, AI enables machines to replicate aspects of human intelligence, such as learning, reasoning, and problem-solving. This overarching field encompasses several specialized disciplines, each contributing to the broader AI ecosystem.

- Machine Learning (ML): ML is a subset of AI that focuses on training algorithms to learn from data and improve over time without being explicitly programmed. For example, a recommendation engine predicting user preferences on streaming platforms is powered by ML.

- Deep Learning: Inspired by the neural networks of the human brain, deep learning leverages multi-layered neural networks to identify patterns and make decisions. Tasks such as image recognition and voice synthesis rely heavily on deep learning techniques.

- Generative AI: Generative AI harnesses advanced ML models to create new and original content, including images, music, and text. Tools like ChatGPT or MidJourney exemplify the potential of generative AI, reshaping industries like content creation and design.

- Natural Language Processing (NLP): NLP bridges the gap between human language and computers, enabling machines to understand, interpret, and respond to textual or spoken input. Applications such as sentiment analysis and chatbots are powered by NLP models.

- Large Language Models (LLMs): A specialized class of NLP models, LLMs are designed to process and generate human-like text by recognizing patterns, understanding context, and making predictions. For instance, GPT (Generative Pre-trained Transformer) models have demonstrated remarkable capabilities in generating coherent and contextually relevant responses.

A Brief History of Models

Types of Neural Networks

Feedforward Neural Network (FNN):

- In FNNs, connections between nodes are linear and move forward, from input to output.

- These networks are ideal for tasks like classification, where input data is mapped directly to output predictions without the need for temporal context.

Recurrent Neural Network (RNN):

- RNNs also move forward through nodes but have the ability to loop backward, creating connections that allow the network to retain information from previous steps.

- These networks are well-suited for tasks in natural language processing (NLP), where the order of input data is critical. For example, understanding the context of a sentence requires awareness of preceding words.

- Using Long Short-Term Memory (LSTM) units, RNNs can handle long input sequences more effectively by maintaining a memory of previous inputs, overcoming the limitations of traditional RNNs.

Transformer Model:

- Unlike RNNs, transformers process data in parallel rather than sequentially, dramatically improving efficiency and scalability.

- The transformer model is composed of an encoder and a decoder, both of which include multiple layers designed to calculate predictions.

- These layers integrate existing concepts like feedforward neural networks and attention mechanisms to analyze and generate data effectively.

- Transformers are foundational to modern AI advancements, powering models like GPT and BERT for applications ranging from translation to conversational AI.

Sequence to Sequence Models, Encoders, and Decoders

Sequence to Sequence Models:

- These models utilize both an encoder and a decoder, making them ideal for tasks that require transforming one sequence into another, such as translation, text summarization, and question-answering.

- Popular models in this category include BART and T5, which excel in handling complex language generation tasks while maintaining context and coherence.

Encoder Models:

- Encoder models focus solely on the encoder component of the transformer architecture.

- They take input sequences and return embeddings — numerical representations of data.

- With bi-directional attention, these models can understand the context from both preceding and succeeding words, making them highly effective for tasks like word classification, entity recognition, and extracting answers.

- Popular encoder models include BERT, ALBERT, and Electra.

Decoder Models:

- Decoder models take embeddings generated by encoders and produce responses or outputs.

- These models are unidirectional, meaning they process input in a single direction, which is advantageous for tasks like text generation.

- Decoder models are widely used for generating coherent and context-aware text, such as creative writing, conversational agents, or storytelling.

- Popular examples include GPT models, which are known for their exceptional language generation capabilities.

Using Vector Search for Semantic Search

Vectors and Dimensions

Vectors:

- Vectors are numerical representations of unstructured data such as text, images, and audio.

- This data is stored as an array of floating-point values, where each value represents a dimension.

- Vectors enable machine learning models to process and analyse complex datasets by transforming unstructured inputs into structured numerical forms.

Dimensions:

- Dimensions refer to the attributes of data represented within a vector.

- For example, in a text vector, dimensions might represent specific words, phrases, or semantic characteristics.

- Higher-dimensional vectors often provide richer representations but can also increase computational complexity.

Sparse Vector

- Sparse vectors are numerical representations of data where most values are zero.

- They hold a small amount of data, making them efficient for specific applications like text searches. For example, MongoDB Atlas Search utilizes sparse vectors for indexing and querying large text datasets.

- A key method for generating sparse vectors is Term Frequency-Inverse Document Frequency (TF-IDF), which evaluates the importance of words within a document relative to a collection of documents.

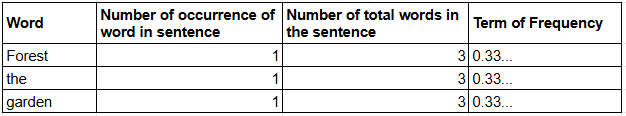

- Term Frequency (TF): Measures how often a word appears in a document.

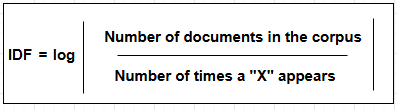

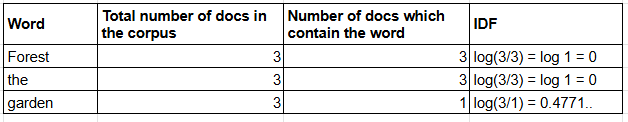

- Inverse Document Frequency (IDF): Assesses how unique or rare a word is across all documents in a corpus.

- By combining these metrics, TF-IDF assigns a weight to each term, enabling effective text retrieval and ranking.

Term Frequency — Inverse Document Frequency = TF * IDF

Let’s discuss the example to get a clear idea of this concept.

Statement 01 — Forest the green

Statement 02 — Forest the dense

Statement 03 — Forest the old

Let's do the Term Frequency Calculation (TF)

Let’s do the Inverse Document Frequency (IDF)

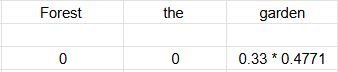

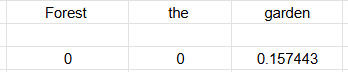

Let's see the multiplication of TF and IDF

TF(Term Frequency Calculation) * IDF (Inverse Document Frequency)

Dense Vector

- Dense vectors are compact numerical representations where most values are non-zero.

- These vectors are ideal for semantic search, enabling systems to capture nuanced relationships and meanings between data points.

- Dense vectors are extensively used in MongoDB Atlas Vector Search, where they allow advanced similarity matching for tasks like recommendation engines and semantic queries.

- Unlike sparse vectors, dense vectors capture complex relationships and are generated by embedding models based on transformer architectures. These embeddings encapsulate semantic meanings, making them powerful tools for modern AI applications.

The transformer model type is in embedding mode as the example below demonstrates:

- Forest is a vast expanse of trees and wildlife, offering tranquility and shelter.

- Forest is also a symbol of growth and interconnectedness, mirroring the resilience of natural ecosystems.

These two sentences have the same word but with different meanings. Once input to the embedded model it will generate different numbers based on the meaning.

How to create embeddings for the data

The accuracy of a model directly correlates with the quality of its embeddings. Embeddings represent data in a way that highlights their relationships and underlying patterns, enabling machine learning models to process and analyze them effectively.

- A common misconception is that increasing the number of dimensions in an embedding automatically improves results. However, the optimal dimensionality depends on the specific use case, and experimenting with different models is crucial to achieving the best outcomes.

- MongoDB Atlas Vector Search supports embeddings with up to 4,096 dimensions, providing ample flexibility for a wide range of applications.

- A key advantage of MongoDB is that vector embeddings are stored alongside the rest of the data within the same database. This eliminates the need for secondary databases dedicated to storing embeddings, streamlining both development and maintenance processes.

Indexing algorithms

1. Hierarchical Navigable Small-World Graphs (HNSW):

- MongoDB uses HNSW graphs to index embeddings in Atlas Vector Search.

- These graphs combine short and long links to create an efficient structure for searching similarities.

- HNSW performs Approximate Nearest Neighbor (ANN) searches, avoiding the need to evaluate every point in the graph, making it highly efficient.

2. Skip List:

- A skip list is similar to a sorted linked list but consists of multiple layers, allowing for faster search operations.

3. Limitations of Vector Search Index:

- Vector indexing is limited to vector embedding fields and cannot be applied to array fields within documents.

- Developers should ensure their data structure aligns with these constraints to maximize search performance and accuracy.

Incorporating these indexing techniques, MongoDB provides a robust infrastructure for GenAI applications, enabling developers to handle complex queries and deliver high-quality results.

db.movies.createSearchIndex(

"vectorPlotIndex",

"vectorSearch",

{

"fields": [

{

"type": "vector",

"path": "plot_embedding",

"numDimensions": 1536,

"similarity": "euclidean | cosine | dotProduct"

}

]

}

);

We can use the below options for the similarity attribute in the createSearchIndex() in MongoDB

Euclidean — uses the distance between vectors in a multidimensional space

Cosine — uses the angle between vectors

dotProduct — uses the angle between the vectors and considers the magnitude. To use the dotProduct vector, it must be normalized to unit length at index and query time.

Creating a Search Query Using a Vector Search

To perform a nearest neighbour search on embeddings, MongoDB Atlas provides the $vectorSearch operator. This operator is integral to performing vector similarity queries, and it is crucial to use the same model that was employed to index the data to ensure consistency and accuracy.

Hybrid Search

Hybrid search combines traditional full-text search and semantic search to deliver comprehensive and contextually relevant results. MongoDB Atlas employs reciprocal rank fusion to assign scores to both Atlas Search and Atlas Vector Search components. By combining the strengths of both methods, hybrid search ensures that results are both relevant and meaningful, typically yielding optimal outcomes with scores between 1 and 60.

Fusion Score = Atlas Search Score + Atlas Vector Search Score

Using Atlas Vector Search for Retrieval Augmented Generation Applications

What is RAG?

Retrieval Augmented Generation (RAG) boosts Large Language Models (LLMs) by letting them access and use external information like documents or databases. This makes their responses more accurate and relevant, especially for tasks like answering questions or creating content.

Chatbot is a common implementation of RAG but RAG is not only used for chatbots

How does RAG work?

The user provides a vectorized query that is used to perform a vector search on the chunks of data in the MongoDB database. Chunks of data are returned and combined with the original query to form a prompt which is sent to the LLM. The LLM then uses the chunks of data along with its own data to generate a response to the query

Why Do We Need Retrieval-Augmented Generation (RAG)?

Large Language Models (LLMs) have transformed the landscape of AI-driven applications, yet they come with limitations that necessitate enhancements like Retrieval-Augmented Generation (RAG). Here’s why RAG is indispensable:

LLM Limitations:

- Hallucination: LLMs can generate incorrect or nonsensical information, especially when faced with ambiguous queries.

- Insufficient or Stale Data: LLMs are typically trained on data up to a specific point in time, making them unsuitable for real-time or domain-specific queries.

- Context Window Overload: The amount of information LLMs can handle in a single prompt is limited by their context window.

- Token Limitations: Each model has a token cap that constrains the length of input and output.

RAG combines embedding and generation models and generates answers based on the retrieved information

AI Integration and Frameworks

Fully Managed Services in AWS

Amazon Bedrock:

- Amazon Bedrock is a fully managed service that is compatible with MongoDB Atlas.

- It allows users to specify data sources for AI models while securely storing chunks and embeddings in Atlas.

- Bedrock can integrate seamlessly with MongoDB Atlas Vector Search, making it an ideal choice for organizations seeking a scalable and secure knowledge base for their AI applications.

Frameworks for RAG Systems

Several frameworks provide robust tools for building Retrieval-Augmented Generation (RAG) systems, each with its unique strengths:

Semantic Kernel:

- Developed by Microsoft, Semantic Kernel is a flexible framework that supports the creation of RAG systems.

- It is not a fully managed service but offers a powerful API for integrating retrieval and generative capabilities in custom AI solutions.

LlamaIndex:

- LlamaIndex (formerly known as GPT Index) facilitates the development of RAG systems by simplifying the integration of knowledge bases with generative models.

- While not a managed service, it provides tools to streamline embedding and retrieval processes, enhancing AI workflows.

LangChain:

- LangChain specializes in chaining together multiple components of an RAG system, from retrieval to response generation.

- Its modular approach allows developers to build complex AI solutions efficiently, leveraging MongoDB Atlas as a robust knowledge store.

Let's come to the practical moment in this journey by creating a simple project using LangChain

First of all need to follow the prerequisites to start the process

- Atlas Cluster Connecting String

- OpenAI API Key

Install the requirements:

pip3 install langchain langchain_community langchain_core langchain_openai langchain_mongodb pymongo pypdf

Preparing Data

Data Ingestion

The process of preparing data for AI involves several critical steps:

1. Identify Data Sources:

Determine where the data resides, such as databases, files, or APIs.

loader = PyPDFLoader(".\sample_files\mongodb.pdf")

pages = loader.load()

2. Prepare the Data:

Ensure the data is complete, consistent, and structured appropriately for the intended use case.

3. Sanitize the Data:

Remove sensitive or unnecessary information.

Convert the data into a consistent format to ensure compatibility with processing pipelines.

Example: Clean pages that contain at least 20 characters of meaningful content.

cleaned_pages = []

for page in pages:

if len(page.page_content.split(" ")) > 20:

cleaned_pages.append(page)

4. Chunk the Data:

Segment the data into manageable pieces for embedding creation.

Methods include:

- By sentences, paragraphs, pages, sections

- Semantically, ensuring logical divisions based on content

Chunking by paragraph ensures that each segment contains coherent and contextually meaningful information.

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=150)

5. Create Embeddings:

Use embedding models to generate numerical representations of each chunk.

schema = {

"properties": {

"title": {"type": "string"},

"keywords": {"type": "array", "items": {"type": "string"}},

"hasCode": {"type": "boolean"},

},

"required": ["title", "keywords", "hasCode"],

}

llm = ChatOpenAI(

openai_api_key=key_param.LLM_API_KEY, temperature=0, model="gpt-3.5-turbo"

)

document_transformer = create_metadata_tagger(metadata_schema=schema, llm=llm)

docs = document_transformer.transform_documents(cleaned_pages)

split_docs = text_splitter.split_documents(docs)

6. Store the Data:

Save both the raw data and the generated embeddings in MongoDB, ensuring seamless integration with the application.

embeddings = OpenAIEmbeddings(openai_api_key=key_param.LLM_API_KEY)

vectorStore = MongoDBAtlasVectorSearch.from_documents(

split_docs, embeddings, collection=collection

)

Retrieving the Data

To retrieve the data we need to follow the below prerequisites

- Atlas Cluster Connection String

- OpenAI API Key

- Data loaded into Atlas

After that,

create file name

dbName = "book_mongodb_chunks"

collectionName = "chunked_data"

index = "vector_index"Create the following vector search index called vector_index on the chucked_data collection

{

"fields": [

{

"numDimensions": 1536,

"path": "embedding",

"similarity": "cosine",

"type": "vector"

},

{

"path": "hasCode",

"type": "filter"

}

]

}In the retrieving process the retriever needs to retrieve chunks related to the query so first needs to point Langchain to our data and embedding in Atlas by setting up a vector store.

from pymongo import from MongoClient

from langchain_mongodb import MongoDBAtlasVectorSearch

from langchain_openai import OpenAIEmbeddings

import key_param

dbName = "book_mongodb_chunks"

collectionName = "chunked_data"

index = "vector_index"

vectorStore = MongoDBAtlasVectorSearch.from_connection_string(

key_param.MONGODB_URI,

dbName + "." + collectionName,

OpenAIEmbeddings(disallowed_special=(), openai_api_key=key_param.LLM_API_KEY),

index_name=index,

)

def query_data(query):

retriever = vectorStore.as_retriever(

search_type="similarity",

search_kwargs={

"k": 3

},

)

results = retriever.invoke(query)

print(results)

Update the query_data function in the rag.py file by passing in a question

query_data("What is the difference between a database and collection in MongoDB?")Add a prefilter to the retrieve

Update the query_data function in the rag.py file to include a pre_filter object.

def query_data(query):

retriever = vectorStore.as_retriever(

search_type="similarity_score_threshold",

search_kwargs={

"k": 3,

"pre_filter": { "hasCode": { "$eq": False } },

"score_threshold": 0.01

},

)

results = retriever.invoke(query)

print(results)

This code generates vector embeddings for a query and uses them to perform a vector search on the chunked_data collection. It also prefilters the data to find chunks without code.

from pymongo import from MongoClient

from langchain_mongodb import MongoDBAtlasVectorSearch

from langchain_openai import OpenAIEmbeddings

import key_param

dbName = "book_mongodb_chunks"

collectionName = "chunked_data"

index = "vector_index"

vectorStore = MongoDBAtlasVectorSearch.from_connection_string(

key_param.MONGODB_URI,

dbName + "." + collectionName,

OpenAIEmbeddings(disallowed_special=(), openai_api_key=key_param.LLM_API_KEY),

index_name=index,

)

def query_data(query):

retriever = vectorStore.as_retriever(

search_type="similarity",

search_kwargs={

"k": 3,

"pre_filter": { "hasCode": { "$eq": False } },

"score_threshold": 0.01

},

)

results = retriever.invoke(query)

print(results)

query_data("When did MongoDB begin supporting multi-document transactions?")

To execute this

python rag.py

Answer generation

In the final step of building our RAG system, we’ll create the answer generation component and put together the entire RAG system.

There are two components of this generation process

- Prompt

- Generative model

Prerequisites

- Atlas Cluster Connection String

- OpenAI API Key

- Data loaded into Atlas

Keep using the above script to add a generation model as well.

Import additional modules:

from langchain.prompts import PromptTemplate

from langchain_core.runnables import RunnablePassthrough

from langchain_core.output_parsers import StrOutputParserCreate a Prompt Template

Here is an example of a template you might create to help the RAG system answer questions:

template = """

Use the following pieces of context to answer the question at the end.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

Do not answer the question if there is no given context.

Do not answer the question if it is not related to the context.

Do not give recommendations to anything other than MongoDB.

Context:

{context}

Question: {question}

"""

Linking the Chain

Let's replace the print(result) section with our RAG chain sequence to put our whole RAG system together:

custom_rag_prompt = PromptTemplate.from_template(template)

retrieve = {

"context": retriever | (lambda docs: "\n\n".join([d.page_content for d in docs])),

"question": RunnablePassthrough()

}

llm = ChatOpenAI(openai_api_key=key_param.LLM_API_KEY, temperature=0)

response_parser = StrOutputParser()

rag_chain = (

retrieve

| custom_rag_prompt

| llm

| response_parser

)

answer = rag_chain.invoke(query)

return answer

Write a query

Update the query_data function in the rag.py file by passing in a question:

query_data("What is the difference between a database and collection in MongoDB?")Final look of our file

from langchain_mongodb import MongoDBAtlasVectorSearch

from langchain_openai import OpenAIEmbeddings, ChatOpenAI

from langchain.prompts import PromptTemplate

from langchain_core.runnables import RunnablePassthrough

from langchain_core.output_parsers import StrOutputParser

import key_param

dbName = "book_mongodb_chunks"

collectionName = "chunked_data"

index = "vector_index"

vectorStore = MongoDBAtlasVectorSearch.from_connection_string(

key_param.MONGODB_URI,

dbName + "." + collectionName,

OpenAIEmbeddings(disallowed_special=(), openai_api_key=key_param.LLM_API_KEY),

index_name=index,

)

def query_data(query):

retriever = vectorStore.as_retriever(

search_type="similarity",

search_kwargs={

"k": 3,

"pre_filter": { "hasCode": { "$eq": False } },

"score_threshold": 0.01

},

)

template = """

Use the following pieces of context to answer the question at the end.

If you don't know the answer, just say that you don't know, don't try to make up an answer.

Do not answer the question if there is no given context.

Do not answer the question if it is not related to the context.

Do not give recommendations to anything other than MongoDB.

Context:

{context}

Question: {question}

"""

custom_rag_prompt = PromptTemplate.from_template(template)

retrieve = {

"context": retriever | (lambda docs: "\n\n".join([d.page_content for d in docs])),

"question": RunnablePassthrough()

}

llm = ChatOpenAI(openai_api_key=key_param.LLM_API_KEY, temperature=0)

response_parser = StrOutputParser()

rag_chain = (

retrieve

| custom_rag_prompt

| llm

| response_parser

)

answer = rag_chain.invoke(query)

return answer

print(query_data("When did MongoDB begin supporting multi-document transactions?"))

Finally, it’s time to run your RAG application and have it answer the question.

Conclusion

AI and Generative AI are shaping the future of technology by unlocking new possibilities in data processing, search, and automation. MongoDB Atlas, with its advanced features like vector search, hybrid search, and seamless integration with embedding models, empowers developers to build intelligent and scalable applications. By combining efficient data preparation, robust indexing algorithms, and modern AI frameworks, businesses can harness the full potential of their data.